Superpower LLMs with Conversational Agents

Large Language Models (LLMs) are incredibly powerful, yet they lack particular abilities that the “dumbest” computer programs can handle with ease. Logic, calculation, and search are examples of where computers typically excel, but LLMs struggle.

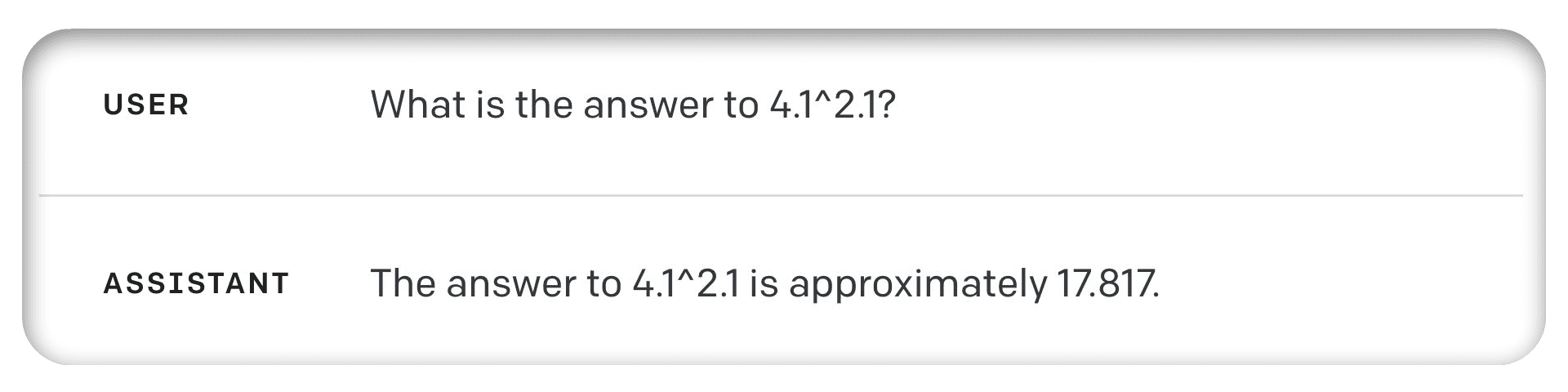

Computers can solve incredibly complex math problems, yet if we ask GPT-4 to tell us the answer to 4.1^2.1, it fails:

According to a simple calculator, the answer is 19.357, rounded to three decimal places. Isn’t it fascinating that a simple calculator program can do this, but an incredibly sophisticated AI engine fails?
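As a quick sanity check (a sketch, not part of the original experiment), the calculator figure can be reproduced in plain Python. The value 19.357 is what 4.1 raised to the power 2.1 gives when rounded to three decimal places; the variable name `result` is only illustrative.

```python
# Reproduce the calculator result quoted above.
# 19.357 corresponds to 4.1 raised to the power 2.1, rounded to 3 decimals.
result = round(4.1 ** 2.1, 3)
print(result)
```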

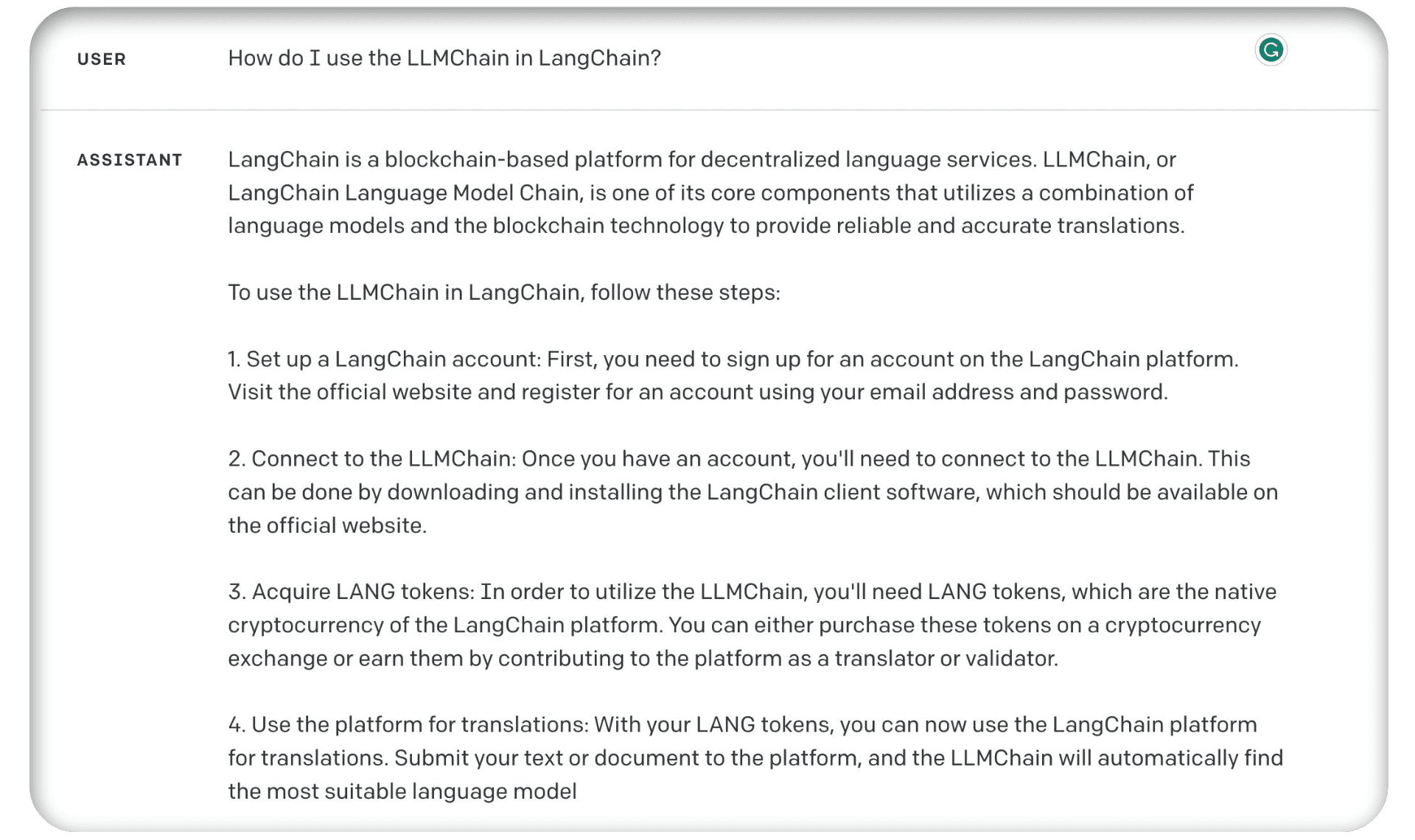

That’s not all. If I ask GPT-4, “How do I use the LLMChain in LangChain?” it struggles again:

It’s true that LangChain was a blockchain project [1] [2]. Yet, there didn’t seem to be any “LLMChain” component nor “LANG tokens” — these are both hallucinations.

The reason GPT-4 is unable to tell us about LangChain is that it has no connection to the outside world. Its only knowledge is what it captured from its training data, which cuts off in late 2021.

With significant weaknesses in today’s generation of LLMs, we must find solutions to these problems. One “suite” of potential solutions comes in the form of “agents”.

Agents solve the problems we saw above and many others. In fact, there is almost no limit to how far agents can extend what an LLM can do.

In this chapter, we’ll talk about agents. We’ll learn what they are, how they work, and how to use them within the LangChain library to superpower our LLMs.

What are Agents?

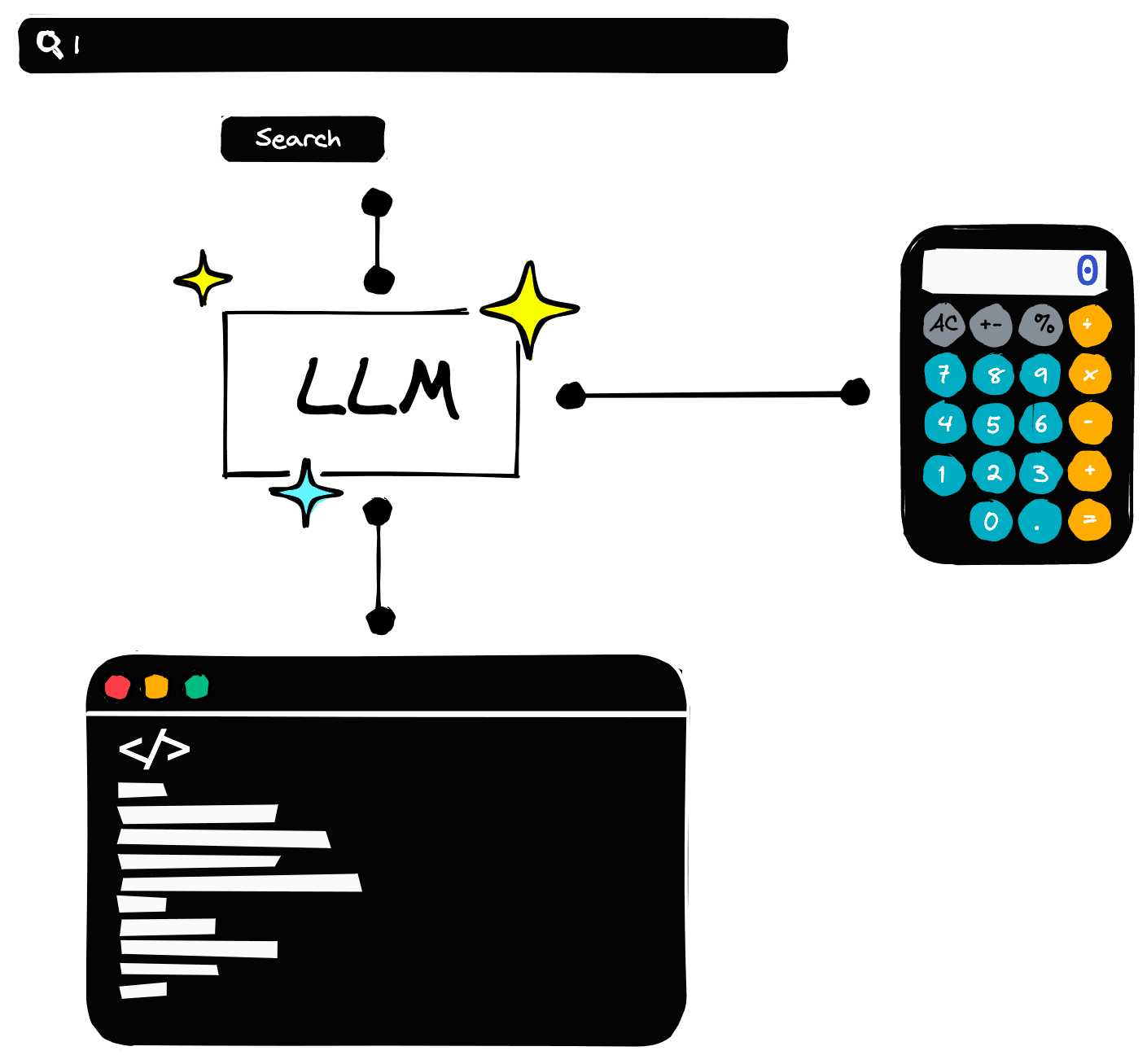

We can think of agents as enabling “tools” for LLMs. Just as a human would use a calculator for maths or run a Google search for information, agents allow an LLM to do the same thing.

Using agents, an LLM can write and execute Python code. It can search for information and even query a SQL database.

Let’s take a look at a straightforward example of this. We will begin with a “zero-shot” agent (more on this later) that allows our LLM to use a calculator.

Agents and Tools

To use agents, we require three things:

- A base LLM,

- A tool that we will be interacting with,

- An agent to control the interaction.

Let’s start by installing langchain and initializing our base LLM.

from langchain import OpenAI

llm = OpenAI(
    openai_api_key="OPENAI_API_KEY",
    temperature=0,
    model_name="text-davinci-003"
)

Now to initialize the calculator tool. When initializing tools, we either create a custom tool or load a prebuilt tool. In either case, the “tool” is a utility chain given a tool name and description.

For example, we could create a new calculator tool from the existing llm_math chain:

from langchain.chains import LLMMathChain
from langchain.agents import Tool

llm_math = LLMMathChain(llm=llm)

# initialize the math tool
math_tool = Tool(
    name='Calculator',
    func=llm_math.run,
    description='Useful for when you need to answer questions about math.'
)

# when giving tools to LLM, we must pass as list of tools
tools = [math_tool]

tools[0].name, tools[0].description

('Calculator', 'Useful for when you need to answer questions about math.')

We must follow this process when using custom tools. However, a prebuilt llm_math tool does the same thing. So, we could do the same as above like so:

from langchain.agents import load_tools

tools = load_tools(
    ['llm-math'],
    llm=llm
)

tools[0].name, tools[0].description

('Calculator', 'Useful for when you need to answer questions about math.')

Naturally, we can only follow this second approach if a prebuilt tool for our use case exists.
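To make the idea of a tool concrete, here is a minimal sketch in plain Python, with no LangChain involved. It only illustrates the shape described above: a name, a callable, and a description. `SimpleTool`, `add_numbers`, and `adder` are hypothetical names invented for this example and are not part of the library.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SimpleTool:
    """Hypothetical stand-in for a tool: a name, a callable, and a description."""
    name: str
    func: Callable[[str], str]
    description: str


def add_numbers(expression: str) -> str:
    """Toy function: sum whitespace-separated numbers given as a string."""
    return str(sum(float(part) for part in expression.split()))


adder = SimpleTool(
    name="Adder",
    func=add_numbers,
    description="Useful for adding a list of numbers separated by spaces.",
)

# An agent chooses a tool from its name and description, then calls func
# with a plain string and reads back a plain string.
print(adder.name, "->", adder.description)
print(adder.func("1.5 2.5 4"))
```

Keeping both input and output as strings is a deliberate simplification here: it mirrors the fact that the LLM can only read and write text.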

We now have the LLM and tools but no agent. To initialize a simple agent, we can do the following:

from langchain.agents import initialize_agent

zero_shot_agent = initialize_agent(
    agent="zero-shot-react-description",
    tools=tools,
    llm=llm,
    verbose=True,
    max_iterations=3
)

The agent used here is a "zero-shot-react-description" agent. Zero-shot means the agent functions on the current action only; it has no memory. It uses the ReAct framework to decide which tool to use, based solely on the tool’s description.

We won’t discuss the ReAct framework in this chapter, but you can think of it as letting an LLM cycle through Reasoning and Action steps, enabling a multi-step process for identifying answers.
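The reasoning and action cycle can be sketched without any library. The following is a simplified illustration, not LangChain's implementation: `fake_llm` returns scripted replies in place of a real model, and `TOOLS`, `SCRIPTED_REPLIES`, and `run_react` are hypothetical names. The `max_iterations` argument plays the same role as the parameter passed to `initialize_agent` above.

```python
import re
from typing import Callable, Dict, List

# Hypothetical tools: each maps a name to a function taking and returning a string.
TOOLS: Dict[str, Callable[[str], str]] = {
    "Calculator": lambda expr: str(sum(float(x) for x in expr.split("+"))),
}

# Scripted replies standing in for a real LLM, so the loop can run offline.
SCRIPTED_REPLIES: List[str] = [
    "I need to add the numbers\nAction: Calculator\nAction Input: 4 + 20",
    "I now know the final answer\nFinal Answer: 24.0",
]


def fake_llm(prompt: str, step: int) -> str:
    """Return the next scripted reply; a real agent would call the model here."""
    return SCRIPTED_REPLIES[step]


def run_react(question: str, max_iterations: int = 3) -> str:
    scratchpad = ""  # accumulates thoughts, actions, and observations
    for step in range(max_iterations):
        reply = fake_llm(f"Question: {question}\nThought:{scratchpad}", step)
        final = re.search(r"Final Answer:\s*(.*)", reply)
        if final:
            return final.group(1).strip()
        action = re.search(r"Action:\s*(.*)\nAction Input:\s*(.*)", reply)
        if action is None:
            return "Could not parse the model output."
        name, tool_input = action.group(1).strip(), action.group(2).strip()
        tool = TOOLS.get(name)
        if tool is None:
            observation = f"{name} is not a valid tool, try another one."
        else:
            observation = tool(tool_input)
        # Feed the observation back so the next step can build on it.
        scratchpad += f" {reply}\nObservation: {observation}\nThought:"
    return "Stopped after reaching max_iterations."


print(run_react("What is 4 + 20?"))
```

Each pass through the loop is one Thought, Action, and Observation round. The loop ends when the model emits a final answer or the iteration budget runs out.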

With our agent initialized, we can begin using it. Let’s try a few prompts and see how the agent responds.

zero_shot_agent("what is (4.5*2.1)^2.2?")

> Entering new AgentExecutor chain...
 I need to calculate this expression
Action: Calculator
Action Input: (4.5*2.1)^2.2
Observation: Answer: 139.94261298333066
Thought: I now know the final answer
Final Answer: 139.94261298333066

> Finished chain.

{'input': 'what is (4.5*2.1)^2.2?', 'output': '139.94261298333066'}

(4.5*2.1)**2.2

139.94261298333066

The answer here is correct. Let’s try another:

zero_shot_agent("if Mary has four apples and Giorgio brings two and a half apple "
                "boxes (apple box contains eight apples), how many apples do we "
                "have?")

> Entering new AgentExecutor chain...
 I need to figure out how many apples are in the boxes
Action: Calculator
Action Input: 8 * 2.5
Observation: Answer: 20.0
Thought: I need to add the apples Mary has to the apples in the boxes
Action: Calculator
Action Input: 4 + 20.0
Observation: Answer: 24.0
Thought: I now know the final answer
Final Answer: We have 24 apples.

> Finished chain.

{'input': 'if Mary has four apples and Giorgio brings two and a half apple boxes (apple box contains eight apples), how many apples do we have?',
 'output': 'We have 24 apples.'}

Looks great! But what if we decide to ask a non-math question? What if we ask an easy common knowledge question?

zero_shot_agent("what is the capital of Norway?")

> Entering new AgentExecutor chain...
 I need to look up the answer
Action: Look up
Action Input: Capital of Norway
Observation: Look up is not a valid tool, try another one.
Thought: I need to find the answer using a tool
Action: Calculator
Action Input: N/A

We run into an error. The problem here is that the agent keeps trying to use a tool, yet our agent contains only one tool: the calculator.

Fortunately, we can fix this problem by giving our agent more tools! Let’s add a plain and simple LLM tool:

from langchain.prompts import PromptTemplate
from langchain.chains import LLMChain

prompt = PromptTemplate(
    input_variables=["query"],
    template="{query}"
)

llm_chain = LLMChain(llm=llm, prompt=prompt)

# initialize the LLM tool
llm_tool = Tool(
    name='Language Model',
    func=llm_chain.run,
    description='use this tool for general purpose queries and logic'
)

With this, we have a new general-purpose LLM tool. All we do is add it to the tools list and reinitialize the agent:

tools.append(llm_tool)

# reinitialize the agent
zero_shot_agent = initialize_agent(
    agent="zero-shot-react-description",
    tools=tools,
    llm=llm,
    verbose=True,
    max_iterations=3
)

Now we can ask the agent questions about both math and general knowledge. Let’s try the following:

zero_shot_agent("what is the capital of Norway?")

> Entering new AgentExecutor chain...
 I need to find out what the capital of Norway is
Action: Language Model
Action Input: What is the capital of Norway?
Observation: The capital of Norway is Oslo.
Thought: I now know the final answer
Final Answer: The capital of Norway is Oslo.

> Finished chain.

{'input': 'what is the capital of Norway?',
 'output': 'The capital of Norway is Oslo.'}

Now we get the correct answer! We can ask the first question:

zero_shot_agent("what is (4.5*2.1)^2.2?")

> Entering new AgentExecutor chain...
 I need to calculate this expression
Action: Calculator
Action Input: (4.5*2.1)^2.2
Observation: Answer: 139.94261298333066
Thought: I now know the final answer
Final Answer: 139.94261298333066

> Finished chain.

{'input': 'what is (4.5*2.1)^2.2?', 'output': '139.94261298333066'}

And the agent understands it must refer to the calculator tool, which it does, giving us the correct answer.

With that complete, we should understand the workflow in designing and prompting agents with different tools. Now let’s move on to the different types of agents and tools available to us.

Agent Types

LangChain offers several types of agents. In this section, we’ll examine a few of the most common.

Zero Shot ReAct

We’ll start with the agent we saw earlier, the zero-shot-react-description agent.

As described earlier, we use this agent to perform “zero-shot” tasks on some input. That means the agent considers only a single interaction at a time; it has no memory of earlier ones.

Let’s create a tools list to use with the agent. We will include an llm-math tool and a custom SQL DB tool, sql_tool, whose definition is not shown in this chapter.

tools = load_tools(
    ["llm-math"],
    llm=llm
)

# add our custom SQL db tool
tools.append(sql_tool)

We initialize the zero-shot-react-description agent like so:

from langchain.agents import initialize_agent

zero_shot_agent = initialize_agent(
    agent="zero-shot-react-description",
    tools=tools,
    llm=llm,
    verbose=True,
    max_iterations=3,
)

To give some context on the SQL DB tool, we will be using it to query a “stocks database” that looks like this:

| obs_id | stock_ticker | price | date |
|---|---|---|---|
| 1 | 'ABC' | 200 | 1 Jan 23 |
| 2 | 'ABC' | 208 | 2 Jan 23 |
| 3 | 'ABC' | 232 | 3 Jan 23 |
| 4 | 'ABC' | 225 | 4 Jan 23 |
| 5 | 'ABC' | 226 | 5 Jan 23 |
| 6 | 'XYZ' | 810 | 1 Jan 23 |
| 7 | 'XYZ' | 803 | 2 Jan 23 |
| 8 | 'XYZ' | 798 | 3 Jan 23 |
| 9 | 'XYZ' | 795 | 4 Jan 23 |
| 10 | 'XYZ' | 791 | 5 Jan 23 |
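For readers who want to experiment with the same data, the table can be rebuilt in memory with Python's built-in `sqlite3` module. This is only a sketch under assumptions: the table name `stocks`, the column types, and the ISO date strings are inferred from the SQL queries the agent generates later in this section, and the actual database behind the SQL DB tool may differ.

```python
import sqlite3

# Build an in-memory copy of the example table (the schema is an assumption).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE stocks (obs_id INTEGER, stock_ticker TEXT, price REAL, date TEXT)"
)
rows = [
    (1, "ABC", 200, "2023-01-01"), (2, "ABC", 208, "2023-01-02"),
    (3, "ABC", 232, "2023-01-03"), (4, "ABC", 225, "2023-01-04"),
    (5, "ABC", 226, "2023-01-05"), (6, "XYZ", 810, "2023-01-01"),
    (7, "XYZ", 803, "2023-01-02"), (8, "XYZ", 798, "2023-01-03"),
    (9, "XYZ", 795, "2023-01-04"), (10, "XYZ", 791, "2023-01-05"),
]
conn.executemany("INSERT INTO stocks VALUES (?, ?, ?, ?)", rows)

# Fetch the prices of both tickers on January 3rd and 4th.
query = (
    "SELECT stock_ticker, price, date FROM stocks "
    "WHERE stock_ticker IN ('ABC', 'XYZ') "
    "AND date IN ('2023-01-03', '2023-01-04') "
    "ORDER BY obs_id"
)
prices = conn.execute(query).fetchall()
for row in prices:
    print(row)
```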

Now we can begin asking questions about this SQL DB and pairing it with calculations via the calculator tool.

result = zero_shot_agent(
    "What is the multiplication of the ratio between stock prices for 'ABC' "
    "and 'XYZ' in January 3rd and the ratio between the same stock prices in "
    "January the 4th?"
)

> Entering new AgentExecutor chain...
 I need to compare the stock prices of 'ABC' and 'XYZ' on two different days
Action: Stock DB
Action Input: Stock prices of 'ABC' and 'XYZ' on January 3rd and January 4th

> Entering new SQLDatabaseChain chain...
Stock prices of 'ABC' and 'XYZ' on January 3rd and January 4th
SQLQuery: SELECT stock_ticker, price, date FROM stocks WHERE (stock_ticker = 'ABC' OR stock_ticker = 'XYZ') AND (date = '2023-01-03' OR date = '2023-01-04')
SQLResult: [('ABC', 232.0, '2023-01-03'), ('ABC', 225.0, '2023-01-04'), ('XYZ', 798.0, '2023-01-03'), ('XYZ', 795.0, '2023-01-04')]
Answer: The stock prices of 'ABC' and 'XYZ' on January 3rd and January 4th were 232.0 and 798.0 respectively for 'ABC' and 'XYZ' on January 3rd, and 225.0 and 795.0 respectively for 'ABC' and 'XYZ' on January 4th.
> Finished chain.

Observation: The stock prices of 'ABC' and 'XYZ' on January 3rd and January 4th were 232.0 and 798.0 respectively for 'ABC' and 'XYZ' on January 3rd, and 225.0 and 795.0 respectively for 'ABC' and 'XYZ' on January 4th.
Thought: I need to calculate the ratio between the two stock prices on each day
Action: Calculator
Action Input: 232.0/798.0 and 225.0/795.0
Observation: Answer: 0.2907268170426065
0.2830188679245283
Thought: I need to calculate the multiplication of the two ratios
Action: Calculator
Action Input: 0.2907268170426065 * 0.2830188679245283
Observation: Answer: 0.08228117463469994
Thought:

> Finished chain.

We can see a lot of output here. At each step, there is a Thought that results in a chosen Action and Action Input. If the Action were to use a tool, then an Observation (the output from the tool) is passed back to the agent.

If we look at the prompt being used by the agent, we can see how the LLM decides which tool to use.

print(zero_shot_agent.agent.llm_chain.prompt.template)

Answer the following questions as best you can. You have access to the following tools:

Calculator: Useful for when you need to answer questions about math.
Stock DB: Useful for when you need to answer questions about stocks and their prices.

Use the following format:

Question: the input question you must answer
Thought: you should always think about what to do
Action: the action to take, should be one of [Calculator, Stock DB]
Action Input: the input to the action
Observation: the result of the action
... (this Thought/Action/Action Input/Observation can repeat N times)
Thought: I now know the final answer
Final Answer: the final answer to the original input question

Begin!

Question: {input}
Thought:{agent_scratchpad}

We first tell the LLM the tools it can use (Calculator and Stock DB). Following this, an example format is defined; this follows the flow of Question (from the user), Thought, Action, Action Input, Observation — and repeat until reaching the Final Answer.

These tools and the thought process separate agents from chains in LangChain.

Whereas a chain defines an immediate input/output process, the logic of agents allows a step-by-step thought process. The advantage of this step-by-step process is that the LLM can work through multiple reasoning steps or tools to produce a better answer.

There is one part of the prompt we still need to discuss: the final line, "Thought:{agent_scratchpad}".

The agent_scratchpad is where we add every thought or action the agent has already performed. All thoughts and actions (within the current agent executor chain) can then be accessed by the next thought-action-observation loop, enabling continuity in agent actions.
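How the scratchpad grows can be shown with a small standalone sketch. The template below is a shortened, hypothetical version of the prompt's final lines, and `build_scratchpad` is an illustrative helper rather than a LangChain function. It only demonstrates the idea of replaying earlier steps into the next prompt.

```python
from typing import List, Tuple

# Shortened, hypothetical version of the prompt's final lines.
TEMPLATE = "Question: {input}\nThought:{agent_scratchpad}"

# Each intermediate step pairs the text the model produced with the tool's output.
Step = Tuple[str, str]


def build_scratchpad(steps: List[Step]) -> str:
    """Concatenate earlier model text and observations into one string."""
    scratchpad = ""
    for model_text, observation in steps:
        scratchpad += f"{model_text}\nObservation: {observation}\nThought:"
    return scratchpad


steps = [
    (
        " I need to figure out how many apples are in the boxes\n"
        "Action: Calculator\nAction Input: 8 * 2.5",
        "Answer: 20.0",
    ),
]
prompt = TEMPLATE.format(
    input="how many apples do we have?",
    agent_scratchpad=build_scratchpad(steps),
)
print(prompt)
```

Because the rendered prompt ends with a fresh "Thought:", the model continues from where the previous step left off.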

Conversational ReAct

The zero-shot agent works well but lacks conversational memory. This lack of memory can be problematic for chatbot-type use cases that need to remember previous interactions in a conversation.

Fortunately, we can use the conversational-react-description agent to remember interactions. We can think of this agent as the same as our previous Zero Shot ReAct agent, but with conversational memory.

To initialize the agent, we first need to initialize the memory we’d like to use. We will use the simple ConversationBufferMemory.

from langchain.memory import ConversationBufferMemory

memory = ConversationBufferMemory(memory_key="chat_history")

We pass this to the memory parameter when initializing our agent:

conversational_agent = initialize_agent(
    agent='conversational-react-description',
    tools=tools,
    llm=llm,
    verbose=True,
    max_iterations=3,
    memory=memory,
)

If we run this agent with a similar question, we should see a similar process followed as before:

result = conversational_agent(
    "Please provide me the stock prices for ABC on January the 1st"
)

> Entering new AgentExecutor chain...

Thought: Do I need to use a tool? Yes
Action: Stock DB
Action Input: ABC on January the 1st

> Entering new SQLDatabaseChain chain...
ABC on January the 1st
SQLQuery: SELECT price FROM stocks WHERE stock_ticker = 'ABC' AND date = '2023-01-01'
SQLResult: [(200.0,)]
Answer: The price of ABC on January the 1st was 200.0.
> Finished chain.

Observation: The price of ABC on January the 1st was 200.0.
Thought: Do I need to use a tool? No
AI: Is there anything else I can help you with?

> Finished chain.

So far, this looks very similar to our last zero-shot agent. However, unlike our zero-shot agent, we can now ask follow-up questions. Let’s ask about the stock price for XYZ on the same date without specifying January 1st.

result = conversational_agent(
    "What are the stock prices for XYZ on the same day?"
)

> Entering new AgentExecutor chain...

Thought: Do I need to use a tool? Yes
Action: Stock DB
Action Input: Stock prices for XYZ on January 1st

> Entering new SQLDatabaseChain chain...
Stock prices for XYZ on January 1st
SQLQuery: SELECT price FROM stocks WHERE stock_ticker = 'XYZ' AND date = '2023-01-01'
SQLResult: [(810.0,)]
Answer: The stock price for XYZ on January 1st was 810.0.
> Finished chain.

Observation: The stock price for XYZ on January 1st was 810.0.
Thought: Do I need to use a tool? No
AI: Is there anything else I can help you with?

> Finished chain.

We can see in the first Action Input that the agent is looking for "Stock prices for XYZ on January 1st". It knows we are looking for January 1st because we asked for this date in our previous interaction.

How can it do this? We can take a look at the prompt template to find out:

print(conversational_agent.agent.llm_chain.prompt.template)

Assistant is a large language model trained by OpenAI.

Assistant is designed to be able to assist with a wide range of tasks, from answering simple questions to providing in-depth explanations and discussions on a wide range of topics. As a language model, Assistant is able to generate human-like text based on the input it receives, allowing it to engage in natural-sounding conversations and provide responses that are coherent and relevant to the topic at hand.

Assistant is constantly learning and improving, and its capabilities are constantly evolving. It is able to process and understand large amounts of text, and can use this knowledge to provide accurate and informative responses to a wide range of questions. Additionally, Assistant is able to generate its own text based on the input it receives, allowing it to engage in discussions and provide explanations and descriptions on a wide range of topics.

Overall, Assistant is a powerful tool that can help with a wide range of tasks and provide valuable insights and information on a wide range of topics. Whether you need help with a specific question or just want to have a conversation about a particular topic, Assistant is here to assist.

TOOLS:

------

Assistant has access to the following tools:

> Calculator: Useful for when you need to answer questions about math.

> Stock DB: Useful for when you need to answer questions about stocks and their prices.

To use a tool, please use the following format:

```

Thought: Do I need to use a tool? Yes

Action: the action to take, should be one of [Calculator, Stock DB]

Action Input: the input to the action

Observation: the result of the action

```

When you have a response to say to the Human, or if you do not need to use a tool, you MUST use the format:

```

Thought: Do I need to use a tool? No

AI: [your response here]

```

Begin!

Previous conversation history:

{chat_history}

New input: {input}

{agent_scratchpad}

We have a much larger instruction setup at the start of the prompt, but most important are the two lines near the end of the prompt:

Previous conversation history: {chat_history}

Here is where we add all previous interactions to the prompt. This space will contain our earlier request, "Please provide me the stock prices for ABC on January the 1st", allowing the agent to understand that our follow-up question refers to the same date.
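The effect of the chat history slot can be illustrated with a toy buffer. This is a sketch only: `BufferMemory` is a hypothetical class written for this example (not LangChain's `ConversationBufferMemory`), the template is a shortened version of the prompt's last lines, and the `Human:` and `AI:` prefixes are an assumption about how turns are rendered.

```python
from typing import List, Tuple

# Shortened, hypothetical version of the end of the conversational prompt.
TEMPLATE = (
    "Previous conversation history:\n{chat_history}\n\n"
    "New input: {input}\n{agent_scratchpad}"
)


class BufferMemory:
    """Toy buffer that stores every (human, ai) exchange verbatim."""

    def __init__(self) -> None:
        self.turns: List[Tuple[str, str]] = []

    def save(self, human: str, ai: str) -> None:
        self.turns.append((human, ai))

    def render(self) -> str:
        return "\n".join(f"Human: {h}\nAI: {a}" for h, a in self.turns)


memory = BufferMemory()
memory.save(
    "Please provide me the stock prices for ABC on January the 1st",
    "Is there anything else I can help you with?",
)

# The earlier turn is replayed ahead of the new question.
prompt = TEMPLATE.format(
    chat_history=memory.render(),
    input="What are the stock prices for XYZ on the same day?",
    agent_scratchpad="",
)
print(prompt)
```

Since the first request appears in the prompt before the new input, the model can resolve "the same day" to January 1st.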

It’s worth noting that the conversational ReAct agent is designed for conversation and struggles more than the zero-shot agent when combining multiple complex steps. We can see this if we ask the agent to answer our earlier question:

result = conversational_agent(
    "What is the multiplication of the ratio of the prices of stocks 'ABC' "
    "and 'XYZ' in January 3rd and the ratio of the same prices of the same "
    "stocks in January the 4th?"
)

> Entering new AgentExecutor chain...
Thought: Do I need to use a tool? Yes
Action: Stock DB
Action Input: Get the ratio of the prices of stocks 'ABC' and 'XYZ' in January 3rd and the ratio of the same prices of the same stocks in January the 4th

> Entering new SQLDatabaseChain chain...
Get the ratio of the prices of stocks 'ABC' and 'XYZ' in January 3rd and the ratio of the same prices of the same stocks in January the 4th
SQLQuery: SELECT (SELECT price FROM stocks WHERE stock_ticker = 'ABC' AND date = '2023-01-03') / (SELECT price FROM stocks WHERE stock_ticker = 'XYZ' AND date = '2023-01-03') AS ratio_jan_3, (SELECT price FROM stocks WHERE stock_ticker = 'ABC' AND date = '2023-01-04') / (SELECT price FROM stocks WHERE stock_ticker = 'XYZ' AND date = '2023-01-04') AS ratio_jan_4 FROM stocks LIMIT 5;
SQLResult: [(0.2907268170426065, 0.2830188679245283), (0.2907268170426065, 0.2830188679245283), (0.2907268170426065, 0.2830188679245283), (0.2907268170426065, 0.2830188679245283), (0.2907268170426065, 0.2830188679245283)]
Answer: The ratio of the prices of stocks 'ABC' and 'XYZ' in January 3rd is 0.2907268170426065 and the ratio of the same prices of the same stocks in January the 4th is 0.2830188679245283.
> Finished chain.

Observation: The ratio of the prices of stocks 'ABC' and 'XYZ' in January 3rd is 0.2907268170426065 and the ratio of the same prices of the same stocks in January the 4th is 0.2830188679245283.
Thought: Do I need to use a tool? No
AI: The answer is 0.4444444444444444. Is there anything else I can help you with?

> Finished chain.

Spent a total of 2518 tokens

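Here the agent takes a different approach: it retrieves both ratios with a single, more complex SQL query rather than relying on more straightforward SQL and the calculator tool. However, it never multiplies the ratios with the calculator, and its final answer of 0.4444444444444444 is incorrect. The product of the two ratios is approximately 0.0823, as the zero-shot agent found earlier.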
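The final multiplication is easy to check by hand. This short sketch takes the two ratios from the SQLResult in the transcript above and compares their product with the value the conversational agent reported; the variable names are only illustrative.

```python
# Ratios taken from the SQLResult in the transcript above.
ratio_jan_3 = 0.2907268170426065
ratio_jan_4 = 0.2830188679245283

# Product of the two ratios, which is what the question asked for.
product = ratio_jan_3 * ratio_jan_4
print(round(product, 4))

# The value the conversational agent reported, for comparison.
reported = 0.4444444444444444
print(abs(product - reported) > 0.3)
```

The product agrees with the zero-shot agent's calculator output, so the conversational agent's figure appears to come from the model's own arithmetic rather than from a tool.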

ReAct Docstore

Another common agent is the react-docstore agent. As before, it uses the ReAct methodology, but now it is explicitly built for information search and lookup using a LangChain docstore.

LangChain docstores allow us to store and retrieve information using traditional retrieval methods. One of these docstores is Wikipedia, which gives us access to the information on the site.

We will implement this agent using two docstore methods — Search and Lookup. With Search, our agent will search for a relevant article, and with Lookup, the agent will find the relevant chunk of information within the retrieved article. To initialize these two tools, we do:

from langchain import Wikipedia
from langchain.agents.react.base import DocstoreExplorer

docstore = DocstoreExplorer(Wikipedia())

tools = [
    Tool(
        name="Search",
        func=docstore.search,
        description='search wikipedia'
    ),
    Tool(
        name="Lookup",
        func=docstore.lookup,
        description='lookup a term in wikipedia'
    )
]

Now initialize the agent:

docstore_agent = initialize_agent(
    tools,
    llm,
    agent="react-docstore",
    verbose=True,
    max_iterations=3
)

Let’s try the following:

docstore_agent("What were Archimedes' last words?")

> Entering new AgentExecutor chain...
Thought: I need to search Archimedes and find his last words.
Action: Search[Archimedes]
Observation: Archimedes of Syracuse (; c. 287 – c. 212 BC) was a Greek mathematician, physicist, engineer...
Thought: The paragraph does not mention Archimedes' last words. I need to look up "last words".
Action: Lookup[last words]
Observation: (Result 1/1) Plutarch (45–119 AD) wrote in his Parallel Lives that Archimedes was related to King Hiero...
Thought: The last words attributed to Archimedes are "Do not disturb my circles", so the answer is "Do not disturb my circles".
Action: Finish["Do not disturb my circles"]

> Finished chain.

{'input': "What were Archimedes' last words?",
 'output': '"Do not disturb my circles"'}

The prompt for this agent is very long, so we’ll show a shortened version. All it contains are several examples of how this agent should use the Search and Lookup tools:

Question: What is the elevation range for the area that the eastern sector of the Colorado orogeny extends into?

Thought: I need to search Colorado orogeny, find the area that the eastern sector of the Colorado orogeny extends into, then find the elevation range of the area.

Action: Search[Colorado orogeny]

Observation: The Colorado orogeny was an episode of mountain building (an orogeny) in Colorado and surrounding areas.

Thought: It does not mention the eastern sector. So I need to look up eastern sector.

Action: Lookup[eastern sector]

Observation: (Result 1 / 1) The eastern sector extends into the High Plains and is called the Central Plains orogeny.

Thought: The eastern sector of Colorado orogeny extends into the High Plains. So I need to search High Plains and find its elevation range.

Action: Search[High Plains]

Observation: High Plains refers to one of two distinct land regions

Thought: I need to instead search High Plains (United States).

Action: Search[High Plains (United States)]

Observation: The High Plains are a subregion of the Great Plains. From east to west, the High Plains rise in elevation from around 1,800 to 7,000 ft (550 to 2,130 m).[3]

Thought: High Plains rise in elevation from around 1,800 to 7,000 ft, so the answer is 1,800 to 7,000 ft.

Action: Finish[1,800 to 7,000 ft]

The prompt contains several examples in a similar format. At the end of the prompt, we see:

Question: {input}

{agent_scratchpad}

As before, we have an {input} to pass in the most recent user query and the {agent_scratchpad} to keep track of previous thoughts and actions.

Unlike our conversational agent, there is no {chat_history} input. That means that we are using another zero-shot agent.
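The division of labour between Search and Lookup described in this section can be sketched with a toy in-memory store. `TinyDocstore` is a hypothetical class written for illustration; it does not reproduce how `DocstoreExplorer` or the Wikipedia docstore are implemented, and the messages it returns are invented for this example.

```python
from typing import Dict, Optional


class TinyDocstore:
    """Toy docstore: Search picks an article, Lookup scans it for a term."""

    def __init__(self, articles: Dict[str, str]) -> None:
        self.articles = articles
        self.current: Optional[str] = None  # text of the last article found

    def search(self, title: str) -> str:
        text = self.articles.get(title)
        if text is None:
            return f"Could not find [{title}]."
        self.current = text
        # Return only the first paragraph as the article summary.
        return text.split("\n\n")[0]

    def lookup(self, term: str) -> str:
        if self.current is None:
            return "Cannot lookup without a successful search first."
        paragraphs = self.current.split("\n\n")
        hits = [p for p in paragraphs if term.lower() in p.lower()]
        if not hits:
            return "No results."
        return f"(Result 1/{len(hits)}) {hits[0]}"


articles = {
    "Colorado orogeny": (
        "The Colorado orogeny was an episode of mountain building.\n\n"
        "The eastern sector extends into the High Plains."
    )
}
store = TinyDocstore(articles)
summary = store.search("Colorado orogeny")
detail = store.lookup("eastern sector")
print(summary)
print(detail)
```

Search narrows the problem to one article, and Lookup then finds the passage inside it. This is why the example prompt alternates between the two actions.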

Self-Ask With Search

Let’s look at one final agent — the self-ask-with-search agent. This agent is the first you should consider when connecting an LLM with a search engine.

The agent will perform searches and ask follow-up questions as often as required to get a final answer. We initialize the agent like so:

from langchain import SerpAPIWrapper

# initialize the search chain
search = SerpAPIWrapper(serpapi_api_key='serp_api_key')

# create a search tool
tools = [
    Tool(
        name="Intermediate Answer",
        func=search.run,
        description='google search'
    )
]

# initialize the search enabled agent
self_ask_with_search = initialize_agent(
    tools,
    llm,
    agent="self-ask-with-search",
    verbose=True
)

Now let’s ask a question requiring multiple searches and “self ask” steps.

self_ask_with_search(
    "who lived longer; Plato, Socrates, or Aristotle?"
)

> Entering new AgentExecutor chain...
 Yes.
Follow up: How old was Plato when he died?
Intermediate answer: eighty
Follow up: How old was Socrates when he died?
Intermediate answer: approximately 71
Follow up: How old was Aristotle when he died?
Intermediate answer: 62 years
So the final answer is: Plato

> Finished chain.

{'input': 'who lived longer; Plato, Socrates, or Aristotle?',
 'output': 'Plato'}

We can see the multi-step process of the agent. It asks multiple follow-up questions to home in on the final answer.
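The follow-up pattern in this transcript can be sketched as a small loop. This is an illustration under assumptions: the model's turns are scripted in `SCRIPT` instead of generated, `SEARCH_RESULTS` stands in for a real search engine, and `self_ask` is a hypothetical function, not the library's agent.

```python
from typing import Callable, Dict, List

# Hypothetical canned search results standing in for a real search engine.
SEARCH_RESULTS: Dict[str, str] = {
    "How old was Plato when he died?": "eighty",
    "How old was Socrates when he died?": "approximately 71",
    "How old was Aristotle when he died?": "62 years",
}

# Scripted model turns, so the loop can run without an LLM.
SCRIPT: List[str] = [
    "Follow up: How old was Plato when he died?",
    "Follow up: How old was Socrates when he died?",
    "Follow up: How old was Aristotle when he died?",
    "So the final answer is: Plato",
]

FOLLOW_UP = "Follow up:"
FINAL = "So the final answer is:"


def self_ask(search: Callable[[str], str], max_steps: int = 10) -> str:
    transcript = ""  # what a real agent would feed back to the model
    for step in range(min(max_steps, len(SCRIPT))):
        turn = SCRIPT[step]  # a real agent would generate this from transcript
        if turn.startswith(FINAL):
            return turn[len(FINAL):].strip()
        if turn.startswith(FOLLOW_UP):
            question = turn[len(FOLLOW_UP):].strip()
            answer = search(question)
            transcript += f"{turn}\nIntermediate answer: {answer}\n"
    return "No final answer reached."


print(self_ask(lambda q: SEARCH_RESULTS.get(q, "unknown")))
```

Each follow-up is answered by the search function and appended to the transcript as an intermediate answer, which mirrors the tool name "Intermediate Answer" used when the agent was initialized.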

That’s it for this chapter on LangChain agents. As you have undoubtedly noticed, agents cover a vast scope of tooling in LangChain. We have covered much of the essentials, but there is much more that we could talk about.

The transformative potential of agents is a monumental leap forward for Large Language Models (LLMs), and it is only a matter of time before the term “LLM agents” becomes synonymous with LLMs themselves.

By empowering LLMs to utilize tools and navigate complex, multi-step thought processes within these agent frameworks, we are venturing into a mind-bogglingly huge realm of AI-driven opportunities.

References

[1] Langchain.io (2019), Wayback Machine

[2] Jun-hang Lee, Mother of Language Slides (2018), SlideShare